The Rise of the Network Commons

Antenna installation at Haus des Lehrers, 2003, image courtesy Freifunk

The Rise of the Network Commons, a book project under development, by Armin Medosch, supported by EU project Confine.

The Rise of the Network Commons is the working title of a new book which I am currently writing. It returns to the topos of the wireless commons on which I worked during the early 2000s. In this new version, combining original research from my German book Freie Netze (2004) and new research conducted in the context of the EU funded project Confine, the exciting world of wireless community network projects such as Guifi.net and Freifunk, Berlin, gets interspersed with philosophical reflections on the relationship between technology, art, politics and history.

The Rise of the Network Commons, Chapter 1 (draft)

This is the first draft of the first chapter. In the final version, the text may change significantly. Critique and comments are welcome. You can send your opinion to me by email or ask me for an account to post comments here: armin (a) easynet dot co dot uk.

The World of Guifi.net and the Dispositif of Network Freedom

On my recent visit to Barcelona in the context of the Confine project, Guifi.net founder Ramon Roca took me to Gurb, the village he comes from. There, in 2003, Guifi.net was started when Ramon realized that he would never get good bandwidth at a fair price in this remote area in sight of the foothills of the Pyrenees. Ramon, who is an IT professional but keeps his working life and his activities with Guifi.net separate, found that he could get broadband by using WiFi to connect to a public building on the outskirts of a nearby small town, Vic. Since then, Guifi.net has grown to become the largest WiFi community network in Europe, with currently more than 25,000 nodes. It is no longer entirely correct to call it a wireless community network, since a growing number of nodes are connected by fiber-optic cable. Since Ramon and his collaborators found out how relatively easy it is to work with fiber, he has been on a new mission: to bring fiber to the curb of as many houses as possible.

Visiting Gurb and talking to Ramon for nearly a full day has revitalized my fascination with wireless (and wired) community networks. I wrote a book on wireless community networks in 2003, in German, under the title Freie Netze (Free Networks). The choice of title deliberately emphasised the analogy between Free Networks and Free Software. The title had been inspired by two very different influences. On the one hand there was Volker Grassmuck's early book Freie Software (http://freie-software.bpb.de/Grassmuck.pdf). Volker's magisterial work provided deep insight into the history and politics of Free Software and stood out for me as an example of how a book on wireless community networks should be written. The other inspiration was a sweeping lecture given in Vienna in June 2003 by Eben Moglen, lawyer of the Free Software Foundation and legal brain behind the licensing model of Free Software, the General Public License (GPL). Moglen's thunderous and captivating speech presented the combination of Free Software, Free Hardware and Free Networks as a kind of holy trinity of the everything-free-and-open movement. Moglen's conclusion was that while Free Software was already an accomplished fact, and free hardware was the hardest bit, free networks were a viable possibility, yet there was still a long way to go to attain critical mass.

My book came maybe a few years too early. When it appeared, some of the most important wireless community networks of today, such as Freifunk, Berlin, Funkfeuer, Austria, or Guifi.net, either did not exist yet or existed only in embryonic form. The model of wireless community networks on which my book was based had been created by Consume.net in the UK. Consume.net was the outcome of an improvised workshop in December 1999 in Clink Street, near London's creative net art hub Backspace. I will describe the history of Consume in more detail below, but one key aspect of that initiative was that it was launched by non-techies. James Stevens, founder of Backspace, and Julian Priest, artist-designer-entrepreneur, provided the impetus for DIY wireless networking by sketching plans for a “Model 1” of WLAN-based community networking on a napkin during a tempestuous train journey in late summer 1999. Their “Model 1” – a name chosen for its association with Henry Ford's first mass-produced car, the Ford Model T or Tin Lizzie – was a techno-social network utopia.

The relatively young discipline of Science Studies teaches us that the technical and the social cannot, or should not, be considered as categorically separate. “Technologies are socially produced” is one of the key phrases in the discourse of science studies. Technologies do not exist outside the human world but are the product of specific societies which exist under specific conditions and circumstances. Technologies are hybrids between nature and society, as science studies author Bruno Latour puts it. Moreover, a specific school of science studies, the Social Construction of Technological Systems (SCTS), has studied the co-evolution of large technological systems and social structures. SCTS pioneer Thomas P. Hughes, who studied the building of the first nationwide electrical grid, found that there are strong co-dependencies between technological and social systems. While business interests undeniably exert a strong influence on the shaping of technologies, Hughes' work emphasizes co-dependencies between technologies and the people who build and maintain them, the technologists or techies – a term I will use from now on because it allows me to refer both to academic computer scientists and researchers and to autodidactic hackers; I hope my use of the term is not seen as derisive in any way.

Engineers and skilled workers involved in large technological projects bring certain predispositions to projects; as projects evolve, the communities of techies develop certain habits and ways of working. The technological and social systems form a unity which determines the ways in which those technologies evolve in the future. What we can learn from science studies is that neither is science objective (in the strict sense of the word), nor is technology neutral. To believe the opposite would constitute either scientific objectivism – a rather outdated form of scientific positivism – or technological determinism, which is the belief that technology alone is the main factor shaping social developments.

James Stevens and Julian Priest, founders of Consume, are neither scientific positivists nor technological determinists. They conceived Model 1 as a techno-social system from the very start. Their ideas combined aspects of social and technological self-organisation. In tech-speak, the network they aimed at instigating was supposed to become a Wide Area Network (WAN). But while such large infrastructural projects are usually built either by the state or by large corporations, James and Julian thought that this could be achieved by bottom-up forms of organic growth.

Individual node owners would set up wireless network nodes on rooftops, balconies and window sills. Each node would be owned and maintained by its owner, who would also define the rules of engagement with other nodes. The network would grow as a result of the combination of social and urban topologies. The properties of the technology – well, strictly speaking there is no such thing as a property of technology, as I have just explained, but let's reduce complexity for a moment – impose certain restrictions. WLAN, as the underlying technology of WiFi is called in more technical circles, operates in a part of the electromagnetic spectrum in which signals do not pass well through obstacles such as walls. Therefore, there needs to be an uninterrupted line-of-sight from one node to the next. Node owners need a way of identifying each other in order to create a link. According to the properties of internetworking protocols, each of those links is a two-way connection, which means that data can travel as easily in one direction as in the other. Furthermore, node owners would agree to allow data to pass through their nodes. There would be not only point-to-point connections from one node to the other, but larger networks, where data can be sent and received via several nodes. Such a wide area community network would also have gateways to the Internet in order to allow exchange of information between the local wireless community network and the wider networked world.
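To make this topology a little more concrete, here is a minimal sketch in Python. It is not Consume's design or any real routing software; the node names and the helper functions `build_links` and `path_to_gateway` are purely illustrative assumptions. It only shows the three properties named above: links are two-way, data may pass through intermediate nodes, and some node acts as a gateway to the Internet.

```python
from collections import deque

def build_links(pairs):
    """Build an adjacency map in which every line-of-sight link is two-way."""
    graph = {}
    for a, b in pairs:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph

def path_to_gateway(graph, start, gateways):
    """Breadth-first search: fewest-hop path from start to any gateway node."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in gateways:
            return path
        # Intermediate nodes forward data on behalf of their neighbours.
        for neighbour in sorted(graph.get(node, ())):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(path + [neighbour])
    return None  # no chain of links reaches a gateway

# Hypothetical nodes; the names are made up for illustration.
links = [
    ("rooftop_a", "balcony_b"),
    ("balcony_b", "window_c"),
    ("window_c", "gateway_d"),
]
mesh = build_links(links)
route = path_to_gateway(mesh, "rooftop_a", {"gateway_d"})
print(route)
```

A node without any line-of-sight link, for instance `path_to_gateway(mesh, "island", {"gateway_d"})`, simply gets `None` back: it cannot reach the wider network until some neighbour agrees to link up.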

Those desired characteristics of Model 1 were not actually invented by Julian and James. Those properties already existed, deep inside the technologies we use to connect, but working for the most part unnoticed by those who use them. The key term has already been introduced above without further explanation: it is the “protocols” that govern the flow of information in networked communication structures. Protocols are conventions worked out between techies to decide how the flow of data in communication networks should best be organised. The basic protocols on which the net is running, such as the Internet Protocol (IP) and the Transmission Control Protocol (TCP), were defined decades ago by engineers and computer scientists working on the precursors of the net, Arpanet and NSFNET. Some people would go as far as saying that the Internet is neither the actual physical structure of cables and satellites used to connect, nor the content that travels via such structures, but that it is embodied in the suite of protocols commonly referred to as TCP/IP (those two are usually mentioned but there are many more). The protocols are the essence of the net because they give it its key characteristics. I am not sure if this is not a very refined form of technological determinism, but I would like to leave this question open for a moment.

The reason for this hesitation is that the protocols are not identical with the technology that uses them. The protocols are conventions that can be described in textual form. This is done through so-called Requests for Comments (RFCs). Since the dawn of the net, RFCs have been defined in a way that runs counter to common understandings of how technologies are created. RFCs are approved by techies who congregate under the umbrella of the Internet Engineering Task Force (IETF). The arcane decision-making mechanisms of the IETF have from the very start been governed by maxims such as “rough consensus and running code”. People who develop new Internet technologies present them to their peers, who then react by making noises such as humming or whistling. The criterion for approval is not theoretical consistency but whether the technologies actually do something or not. The robustness and the freedom of the net are guaranteed, despite the lack of central coordination, by the self-organised decision-making power of those techies who meet at the IETF. While a lot of those people may have jobs with large corporations, when they meet at IETF conferences they still decide as technicians who adhere to their own codes of human responsibility.

Amazingly, despite the commercialisation of the net, this has not fundamentally changed. Corporations and governments may seek to wrest more and more control over the net, and while they are actually quite successful in doing so in some areas, the social protocols of decision making enshrined in the mores of the techno-social communities have so far been able to withstand all such assaults. On the layer of the protocols the net was and is still “free”.

Thus, when James and Julian wrote out the formula of growth for Model 1, they referred to a freedom to connect that is inherent to the way in which the Internet was originally conceived and the way it still functions now, on the layer of the protocols. The knowledge and awareness of that fact had become buried by new layers built on top of older layers in the course of technological improvement, but also by the commercialisation of the net in the 1990s. Consume.net was started at the cusp of what was then called the New Economy, a stock exchange boom fueled by the rise of information and communication technologies in general and PCs and the Internet in particular. The 1990s had been a very exciting decade which saw the net rise from obscurity, from a communication technology used by scientists and a small number of civil society organisations, artists and freaks in the late 1980s and early 1990s, to a new mass medium driving and being driven by a gigantic economic machinery. In the process, a lot of the properties that had been dear to the early inhabitants of the net, the digital natives, had become either sidelined or overshadowed by commercially driven interests and the secret workings of the deep state.

Model 1 was thus both a new techno-social invention and a recourse to the original Internet Arcadia. Against the tide of rising commercialisation and the inequalities and distortions that came with it, wireless community networks were supposed to bring back a golden age of networked communication, of equality and freedom. Technical and social properties were conflated into a model of self-organisation. The possibility for that was provided by a small and often overlooked feature of the technology. 802.11b was the technical name of the wireless network protocol in use around 1999. It allowed two different operating modes, one where each wireless network node knew its neighbors and could receive and send data based on fixed routing tables, and another one, the ad-hoc mode, where nodes would spontaneously connect with each other. The ad-hoc mode was supported by routing protocols that would be best suited for the wireless medium. In a fixed network with cables, it is of advantage to work with fixed routing protocols. When data arrives, the network node decides where to send it, based on its knowledge of the topology of the network. But in wireless networks that topology constantly changes. Nodes can break down due to atmospheric or environmental influences. The quality of connection can change dramatically because of disturbances in the electromagnetic medium. Or a truck parks in front of your house and the line-of-sight is suddenly gone.
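The difference between a fixed route and one that follows a changing topology can be sketched in a few lines of Python. This is an illustration only: it uses Dijkstra's shortest-path algorithm as a stand-in, not any actual mesh routing protocol, and the four nodes, the link-quality costs and the helper names `best_route` and `drop_link` are assumptions made up for the example.

```python
import heapq

def best_route(links, source, target):
    """Dijkstra's algorithm over link costs (a lower cost means a better link)."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    done = set()
    while heap:
        cost, node = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        if node == target:
            break
        for neighbour, link_cost in links.get(node, {}).items():
            new_cost = cost + link_cost
            if new_cost < dist.get(neighbour, float("inf")):
                dist[neighbour] = new_cost
                prev[neighbour] = node
                heapq.heappush(heap, (new_cost, neighbour))
    if target not in dist:
        return None  # the target is currently unreachable
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]

def drop_link(links, a, b):
    """Remove a two-way link, e.g. when a truck blocks the line-of-sight."""
    links[a].pop(b, None)
    links[b].pop(a, None)

# Hypothetical four-node network; the costs are made-up link-quality scores.
links = {
    "A": {"B": 1.0, "C": 4.0},
    "B": {"A": 1.0, "D": 1.0},
    "C": {"A": 4.0, "D": 1.0},
    "D": {"B": 1.0, "C": 1.0},
}
before = best_route(links, "A", "D")   # uses the good link via B
drop_link(links, "B", "D")             # line-of-sight between B and D is lost
after = best_route(links, "A", "D")    # recomputed: falls back to the path via C
print(before, after)
```

A node with a fixed routing table would keep sending data towards B after the link has gone; recomputing the route from the current state of the links is the basic idea that the routing protocols for the ad-hoc mode try to automate.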

For this reason, Consume.net started to get interested in a technology called mesh networking. In the year 2000 mesh network protocols were still very much in their infancy. There was a working group called Mobile Ad-hoc Networking (Manet), supported by the US military. In London, a small company was building something called Meshcube. It was a working technology, but it was not really open source and only the developer knew how to run it. When Consume.net started to work with mesh network technology, it seemed to be a utopian technology. While neither James nor Julian were techies, they had the support of some very skilled hackers, but none of them was capable of significantly developing mesh network protocols. Mesh networking was a dream, something that was already on the horizon but not yet there.

This was a pattern established in 2000 and still very much in place in the year 2014: once the problems of mesh networking were solved, wireless community networks would flourish and become unstoppable. Social qualities, such as self-organization without centralized forms of control, were mapped onto technological properties, such as the ability of machines to automatically recognize each other and connect to build a larger cloud of networked nodes. The idea of network freedom – the ability to connect without having to apply to a central point of governance, and without having to go through a company such as a telecommunications operator (telco) – was supposed to further communication freedom, and thus the rights and ability of people to express themselves and communicate freely without top-down hierarchical control. The convergence of those ideas I call the dispositif of mesh networks and network freedom.

I am appropriating the term dispositif from Michel Foucault who used it to “refer to the various institutional, physical, and administrative mechanisms and knowledge structures which enhance and maintain the exercise of power within the social body” (Wikipedia: http://en.wikipedia.org/wiki/Dispositif).1

Our mesh network dispositif does not (yet) add up to all society, but it is something that is widely shared among techies building wireless community networks. It is a discursive behaviour, but also a set of beliefs and a set of material assemblages. Now that, assemblage, is another term that I appropriate freely from a French philosopher, Gilles Deleuze. While the dispositif does not exist outside time, it is somehow hovering above the concrete historical moment. In this way, the dispositif of mesh networks has influenced wireless community networks since the year 2000. The assemblage, while also consisting of material and non-material components, is concretely manifest in the historical moment. The mesh network dispositif promises to bring about an era of unrestricted and seamless communication, free from technological and social constraints. This dispositif historically legitimates itself by the way the Internet was originally conceived. At the same time it contains the promise of a future when the net will again be what it once was.

When I came to Barcelona in July 2014, I was thrilled to see that as part of the EU funded research project Confine a project was under way to develop Quick Mesh Project (QMP). QMP is a so-called free firmware, a Linux-based operating system for network devices. Many people now have wireless routers at home. When you buy Internet access from a provider, you often also get a box that allows you to connect wirelessly to the net. QMP would replace the operating system of such a device with a much improved version, one that speaks the language of mesh network protocols. To give a simple example, if in a street of apartment blocks everybody who owns a wireless router replaces the firmware with QMP and then puts the router on the window sill, all those machines would automatically connect and build a network without using any cables or other hardware from commercial providers. It would make it easy and simple to connect without having to go deep into system settings. This has now changed from being a faraway utopian goal to something that is literally around the corner.

It may or may not succeed. One problem is that it resembles what Saskia Sassen described as an engineer's utopia. Techies, whether they are academically trained computer scientists, telecommunications engineers or self-taught hackers, tend to believe in the unlimited potential of technology. They see the potential of a technology. There is nothing that speaks against that, on the contrary. It takes such people, who are capable of dreaming a different future based on creative bending and twisting of technologies. The problem, however, is that far-sighted techies tend towards a linear extrapolation of technologies into the future without considering other factors, such as politics, the economy, the fundamental differences between people in class-based societies and so on and so forth. In this way, the highly productive mesh network dispositif gets turned into the dreamworld of the Internet cornucopia. The technology gets imbued with characteristics that are actually outside it and depend on factors beyond the influence of creative technologists. It becomes a messianic technology in the way the great philosopher of culture and technology Walter Benjamin theorized it in the 1930s.

(to be continued ...)

- 1. The same Wikipedia page further defines the dispositif as “the interaction of discursive behavior (i. e. speech and thoughts based upon a shared knowledge pool), non-discursive behavior (i. e. acts based upon knowledge), and manifestations of knowledge by means of acts or behaviors [...]. Dispositifs can thus be imagined as a kind of Gesamtkunstwerk, the complexly interwoven and integrated dispositifs add up in their entirety to a dispositif of all society.” (quoted from Siegfried Jäger: Theoretische und methodische Aspekte einer Kritischen Diskurs- und Dispositivanalyse http://www.diss-duisburg.de/Internetbibliothek/Artikel/Aspekte_einer_Kritischen_Diskursanalyse.htm)

Network Commons: dawn of an idea (Chapter 1, part 2 - Draft)

Good ideas often pop up at the same time at various points on the Earth; they just seem to be in the air. And so it came that around the year 2000 wireless free community networks were started at different points on the globe: Consume.net in London, New York Wireless, Seattle Wireless and Personal Telco in Portland, Oregon, were among the first wireless community networks based on Wireless LAN, or WLAN. Nobody can really say which one came first. I have been lucky to experience the development of Consume and free2air.org in London at close range. Therefore, in this chapter I will tell the story of those networks.

But before I go into the details of this story, it is worth remembering a bit how things were back then. Today, when the debate shifts to a topic such as the so-called digital natives, many young people seem incapable of comprehending that there are middle-aged people like me who have spent a large part of their adult life on-line. I had my first computer in 1985, whereby I should say we, because it was a computer shared between my then girlfriend and myself. In 1989 it was followed by two new computers. She got an Amiga 2000, and I got a pre-Windows PC. So I spent a good deal of time learning key commands for the DOS version of Word, while my partner could do wonderful graphical stuff on her Amiga. We could even digitize video, change every single image and turn it into a loop that could be played out and recorded to tape. While I was envious of the slick graphical interface of the Amiga, my PC soon learned a new skill, communication with other computers. That was when the whole on-line fun started.

Actually, we had to overcome a few obstacles first. In Europe, computer modems at the time – around the late 1980s to the early 1990s – had to be licensed by the national Post, Telegraph and Telephone company (PTT). This made the stuff prohibitively expensive for many. But we found a workaround. We traveled to West Berlin and there, in a store called A-Z Electronics, we could buy a 2400 baud modem on the cheap. This modem could be legally sold because it had one cable missing – a loophole in German law according to which it was legal to sell unlicensed equipment if it was not in a state to be used. After we had smuggled it back to Vienna, we soldered the cable and connected the modem to the telephone end-connection-point. Franz Xaver, a friend and artist-engineer, had to help to solve issues with the arcane Austrian telephone system. Another friend brought a pack of diskettes and we installed Telix, a program for communicating with bulletin board systems (BBS).

The BBS world was like a testing ground for virtual communities where certain types of behavior could form. This could be elements of a netiquette, but also an understanding of what it means to be on-line in the first place. Stories about early on-line communities by authors such as Sandy Stone1 and Howard Rheingold2 describe how these communities, some of which go back to the early 1970s, foster social (or anti-social;-) behavior.

The first artistic experiments with “Art and Telecommunication”3 began in the late 1970s. The Canadian artist Robert Adrian X, who by then was living in Vienna, started an artists' conference board called Artex on a proprietary network in 1980. Fellow artist Roy Ascott described in vivid terms how it felt to be on-line and engage in real-time synchronous communication.

"Over the past three years I have been interacting through my terminal with artists in Australia, Europe and North America, once or twice a week through I.P. Sharp's ARTBOX. I have not come down from that high yet and frankly I don't expect to. Logging in to the network, sharing the exchange of ideas, propositions, visions and sheer gossip is exhilarating. In fact it becomes totally compelling and addictive." (Roy Ascott 1984, quoted in Grundmann 1984, p. 28)

Similar feelings have since been shared by almost everyone who first experienced an always-on network connection. But let's return to the BBS world, which could be quite wild at times. Artist-hackers such as Toek from radio art and performance group DFM circumvented the fact that those systems did not really have graphical interfaces by creating a log-on page with flashing and blinking ASCII animations. Communications in those systems were uncensored – apart from the curiosity of the maintainer of the system – and sometimes one could encounter, without looking for it, cracked software or literature such as the “Hackerfibel” by the Chaos Computer Club, or the Anarchist Hackers Cookbook, or The Temporary Autonomous Zone by Hakim Bey; one could also find software for war-dialling and similar things bordering on what was legally permissible. Thus was created the myth of the Internet as a kind of large DIY bomb-building workshop.

This is a pretty persistent myth, by the way, but it has maybe more to do with the criminalization of hacking by the US secret services, who seemed to be intent on demonizing an activity that many of those involved understood primarily as curiosity, research, interest, gaining new knowledge. When the Internet was opened up for public usage, it seemed to get populated very quickly by all kinds of creative spirits. In 1995, when I had, through work, my first always-on “broadband” Internet, the web seemed to consist primarily of artists, anarchists, trade unionists, multinational and non-governmental organisations, campaigners for the environment, workers' rights and indigenous groups, as well as the occasional commercial web page of a forward-looking company and the standard-setting physics department homepage, which has been immortalized by artist Olia Lialina in her work "Some Universe": http://art.teleportacia.org/exhibition/stellastar/. Lialina has also collected “Under Construction” signs, another charming aspect of the early web.

While the on-line world was colorful and intellectually stimulating, Internet access was not cheap at all at the time. We looked with envy at the US, where local calls were almost free. In Europe you had to pay not only the costs of a provider, but also the costs of the call for every minute you spent on-line. As the 1990s progressed, the modems got faster and telephone provider rates maybe a bit cheaper, but the situation remained fundamentally the same, except in those rare instances where people came up with inventive solutions.

Cheap Broadband for the Masses: Vienna Backbone Service

In Vienna, Austria, the media artist Oskar Obereder started an Internet service provider almost by accident. With some art school friends, Obereder had launched “A Thousand Master Works”, a project where artists produced multiples which were sold via a poster. Soon, the poster proved an inefficient method of keeping the offerings up to date. Obereder created a database and, together with some other artists, hackers and the editors of music magazine Skug, brought the server on-line as a web-based ordering system. The same technology also supported Skug's database of independent music. This machine had to be on-line 24/7, so Obereder and Skug had to get a leased line. In order to share the cost, they distributed Internet access throughout the loft-spaces in a former furniture factory where lots of other artists and creative people worked. Everyone who connected to this cable-bound ad-hoc net got the buzz of an always-on Internet, and Obereder inadvertently became a provider.

Working together with a small ISP, AT.net, Obereder and colleagues found out about a brand new technology coming from California that allowed normal copper telephone lines to be used for broadband Internet connections. This was called DSL, and when they first contacted the manufacturer, it told them to get lost, because it only sold to telecom providers. Finally, the Austrians got hold of a few modems and started laying the groundwork for what would become Vienna Backbone Service (VBS). This network was offered by three small ISPs as a collaborative effort, but it was also “provided” by many of its first customers, who were hosting network exchange services in their cupboards.

Because of the “creative milieu”4 in which Obereder existed, he knew many artists and techies, or combinations of those, who had high bandwidth needs and some technical skills. By now he had founded a company called Silverserver – later shortened to Sil – and he and his colleagues had found out that there was a special type of telephone line that you could rent quite cheaply from the incumbent, and over which you could run DSL. Moreover, the cost was dependent on the distance from the next exchange. Silverserver started finding friends, who were also customers, who lived next to an exchange. In this way, they found a foothold in many Viennese districts, from where they could spread out organically, offering always-on broadband at, initially, a tenth of the price of the incumbent.

In 1998 the workshop and conference Art Servers Unlimited brought together about 40 artists, hackers and activists of all kinds at London's Backspace and the ICA. Obereder was presenting the model of VBS and James Stevens caught an earful of it. What he mainly got out of it was that you could grow a rather large network in a decentralized way, by a collaborative method that involved people taking over responsibility for a node.

Consume – the culture of free networks

Free Networking as social mechanism: Consume workshop with Manu Luksch, Ilze Black and Alexei Blinov, probably 2003

James Stevens and Julian Priest found another inspiration for their “Model 1” (see chapter 1) in the way WiFi was used in a particular neighborhood and social environment to share a leased line Internet connection. At the turn of the millennium, James Stevens and Julian Priest had “worked for a decade almost in multimedia, making CD ROMs and websites, running around... then we decided to give it a try, and concentrate on doing more altruistic work.”5

Both had their offices in a special corner of Southwark, the London borough just south of the Thames, in Clink Street, in a small warehouse directly on the riverside called Winchester Wharf. Today, oh irony, the ground floor is occupied by a Starbucks. Adjacent to it there were other warehouses, converted into offices and studios for various creative outfits, from record labels to web and multimedia companies. In Winchester Wharf, the web company Obsolete and the Internet cafe Backspace enjoyed a few years of happy coexistence. Obsolete had become successful quickly by making web-pages for Ninja Tune and other record labels located in the same building. The record companies were followed by some blue chip companies such as Levi's, who were intent on having a cool, young image. But James Stevens had already opted out by that time.

So he founded Backspace (http://bak.spc.org/), a place on the ground floor, with one window almost at water level when the tide was high. Fittingly, the homepage of Backspace showed (and still does show) a graphical animation of the river Thames with the web-sites hosted by Backspace floating like half-submerged buoys in the river. Descriptions of Backspace as an Internet cafe or gallery just show the inadequacy of common language to describe what it was. It was a hub where people with all kinds of ideas – whether they were related to the Internet or not – came together to talk, organize, share. Backspace was a crucible of London's net art and digerati, where events such as the legendary "Anti with E" (http://www.irational.org/cybercafe/backspace/) conferences and lectures took place. Backspace also quickly became known for its regular live-streaming sessions, at first mainly radio, later also video, with Captain Gio D'Angelo often in command6.

That was only made possible because Backspace shared a leased line with Obsolete, who were just upstairs. The other small outfits in the area, on the same street but not in the same building, also wanted a share in the bandwidth bonanza. At first some sort of grey-area solution was considered, like finding a way of connecting buildings via Ethernet, but that turned out to be impossible unless one broke the law or dug up the street, legally of course, as a provider company. At some point, someone must have stumbled over WiFi, or Airport, as the version promoted by Apple was called. A lot of people in Clink Street were designers and thus Apple users, and Apple was at the time the first major consumer computer company to support WiFi (See History of WiFi, by Vic Hayes et al. 7).

The creative cluster of artists, designers, musicians and entrepreneurs experienced the benefits of broadband and also the laws of network distribution. As Stevens and Priest noted, the maximum bandwidth available is only relevant at peak times, when everybody is on-line, checking into the system, or after work, when people play games or watch videos. Otherwise, the 512K connection, which today would no longer be considered broadband, gave everyone enough space to live, listen to music, build web-pages or even play on-line games. But the bandwidth paradise on the southern shore of the Thames was not to last.

Winchester Wharf was sold as part of a general regeneration drive on the southern river bank, at a time when Tate Modern was opened and the whole area underwent a wider transformation. “Between us, we both had an axe to grind when Backspace was closed, we sat together and talked about it and thought it was a good moment to put into practice some of the ideas that we have hatched and some of the things that we have experimented with,” remembered James in 2003. In late 1999, they organized a first workshop to start building Consume, in the offices of I/O/D, one of a web of companies and art groups in the area. For James Stevens, it was from the beginning a “social thing”. “The idea that came out was much more straight forward than it looks now, but it was interlinking locations where people work and live using this wireless stuff. We did it already across the street, so that sort of scale where we had a grasp.” (James Stevens, interview with the author, 2003).

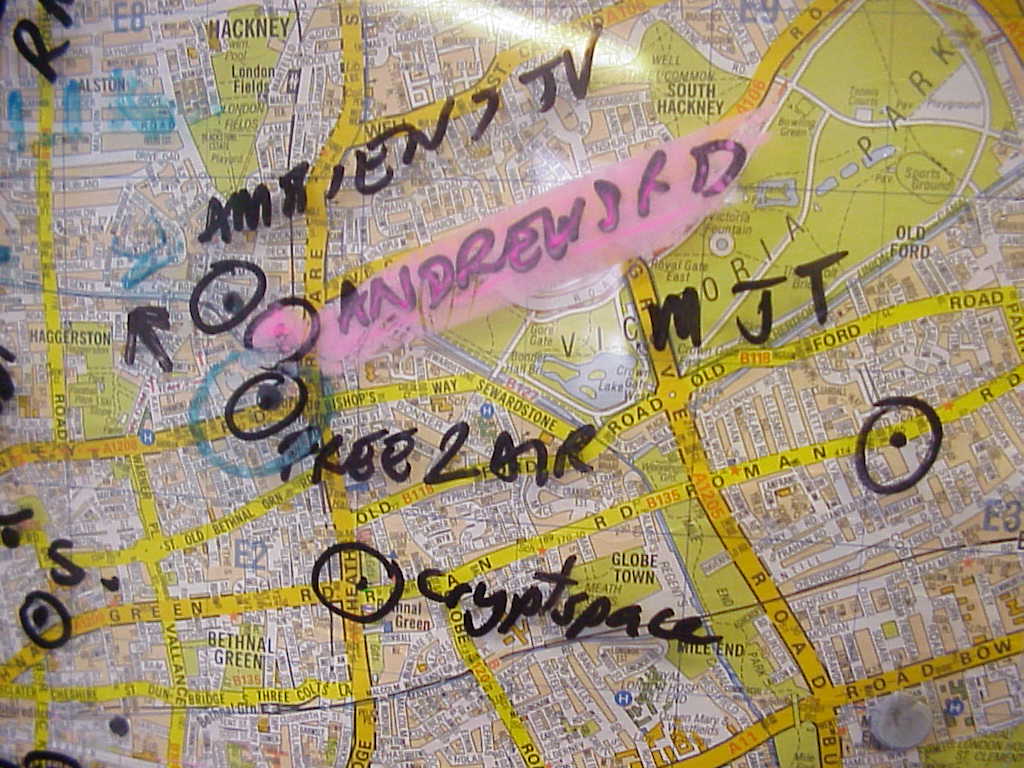

Consume workshop at the studio of AmbientTV.NET, London circa 2003

I received this invitation and remember that I was electrified (although it turned out that I could not participate in that first meeting). I knew that James Stevens was on to something. As he later put it in his own words, "it was on the cusp of a wave of awareness that was sweeping around, also economically we were in a funny state, in a kind of decline of the swell after all of that gluttony of that Dotcom shit." Within the space of a few years the Net had been completely transformed from a colourful space dominated by various leftist and creative types to a place apparently ruled and defined by multinational corporations.

The early WWW had generated a lot of enthusiasm about free speech and possibilities of political self-organisation. It was seen as an electronic Agora, a place where democracy could be reinvented through participatory processes, electronically mediated. Yet the media devoted all their attention to Internet startups such as Netscape and Amazon, who made billions with their IPOs. Ideas about freedom of speech and creative expression, held dear at places such as Backspace, were completely omitted from public discourse. But in late 1999 the stock market boom had started to falter and in April 2000 the Nasdaq collapsed. Suddenly, the pendulum swung back and ideas about freedom of speech and political self-organisation returned. The call for the first Consume workshop was met with “a phenomenal response” according to Stevens. The question they asked themselves was this: the technology had been shown to work in a relatively confined area. Could it be made to work over a mile or two? Could different areas be connected into a Wide Area Community Network? Stevens:

“There was a momentum there, in that way, because it grasped people's attention and got them to come out, literally, just physically to turn up, gather at a meeting, and really, the second meeting that we had, we built nodes. It was really just like as direct as that: physically turn up and do it; those who could handled the Unix side of it, which is not everybody, obviously.” (Stevens 2003)

This workshop must have been in the first half of 2000. What they were out to do “was to provide ownership of network segments to self-provide those services and in addition to that do all sorts of node-to-node kind of benefits,” explained Stevens. But routing soon turned out to be a core issue to be solved. The nodes deployed in such a network had to mesh, and this had to happen automatically.

This was a grave problem in 2000, since the Internet by then had been thoroughly commodified, and chunks of it handed over to companies, who could define them as their "country" or Autonomous System, controlling the entry points of the network. Routing between such systems is handled by the Border Gateway Protocol (BGP), and on a technical level there is nothing to be said against it. However, it introduces a more leafy, hierarchical structure, which boils down to the problem of exclusive IP numbers in networks. Due to the cascaded nature of networks, with many layers, users in internal networks are often connected via a technique called NAT (Network Address Translation). That means that a router controls the connection to the world, while a node behind it is visible to the world only through this gateway. In other words, there is no publicly visible route to this machine. If a lot of people who share their network connectivity via wireless have such a provider, the routing in the network becomes a problem. There are workarounds for that problem, but this is just one aspect of a protracted sequence of issues regarding wireless and routing. 8
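To make the NAT problem a little more tangible, here is a minimal sketch in Python. It is a toy model and not how any real router is implemented: the class `ToyNat`, its port numbering and all addresses are assumptions made up for illustration. It shows only the one property that matters here: a machine behind the gateway can reach out, but nobody outside can reach in unless the machine has spoken first.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


@dataclass
class ToyNat:
    """Hypothetical, heavily simplified NAT gateway (illustration only)."""
    public_ip: str
    next_port: int = 40000
    # public port on the gateway -> private endpoint that opened the connection
    table: Dict[int, Endpoint] = field(default_factory=dict)

    def outbound(self, private_src: Endpoint) -> Endpoint:
        """A private host sends a packet out; return the source address the world sees."""
        for port, src in self.table.items():
            if src == private_src:
                return (self.public_ip, port)  # reuse the existing mapping
        port = self.next_port
        self.next_port += 1
        self.table[port] = private_src
        return (self.public_ip, port)

    def inbound(self, public_port: int) -> Optional[Endpoint]:
        """A packet arrives from outside; return the private host, or None if it is dropped."""
        return self.table.get(public_port)


nat = ToyNat(public_ip="203.0.113.7")   # address from a documentation range, not a real host
laptop = ("192.168.1.20", 5555)         # private address behind the gateway

seen_by_world = nat.outbound(laptop)
print(seen_by_world)                    # the world only ever sees the gateway
print(nat.inbound(seen_by_world[1]))    # replies find their way back to the laptop
print(nat.inbound(8080))                # unsolicited traffic has nowhere to go
```

In a wireless community network this matters because every node is supposed to be reachable by its neighbours. If many nodes sit behind gateways of this kind, they can consume the network but cannot easily offer anything to it.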

At that point, in the year 2000, mesh networking technology was really in its infancy. Through the launch of Consume, a lot of gifted people started to get interested in mesh networking and similar ideas. It is fair to say that community networks took mesh technology out of the military closet and turned it into a working technology (a story which continues today with great intensity and to which I will return later in this book).

The way Consume grew, initially, owed much more to the special "genius" of James Stevens than to any technology. "Genius", a term usually reserved for artists or sometimes also scientists, in this context refers to a social skill. James Stevens has a special way of “growing” projects, of initiating them, bringing them into existence but then letting them go their own way. Rather than becoming the leader and figurehead, he tries to initiate a self-perpetuating idea. Maybe this also has something to do with his past in the underground music and squatter scene in the 1980s. Politically, those social scenes were, if not explicitly anarchist, connected with a deep-seated social and artistic liberalism that I found to be much more entrenched in England than in any other country I know.

For Stevens and Priest it was a long-term goal to “find an opportunity, within the legislation of radio spectrum, to use these domestic computer devices to interlink in a way that it was deemed possible to bypass the local loop,” as Stevens put it. For him, what became a priority was advocacy, “promotion of systems that create a mesh over the topography. […] You just have to propagate the idea or possibility or potential across the landscape.” And that's what happened in the years 2000 to 2002. While Julian Priest had to take a step back for a little while for private reasons and because of moving to Denmark, James Stevens and a small but fast-growing group of volunteers were building Consume, a self-propagating net. A Consume mailing list and a website were launched. But the main mechanism for propagating the idea was the workshop. There were a number of workshops in spring and summer of 2002, one at the studio of Manu Luksch and Ilze Black, another one at Limehouse Town Hall, which I remember vividly.

The workshops offered something for everyone. First and foremost, they gave people in a particular area the opportunity to meet and discuss the possibilities of creating a local wireless community network. This involved the social side of getting to know other people in the area. This may not sound like much, but in London talking to neighbours is seen as something quite radical. The only apparently banal act of "talking to neighbours" went together with exploring the cityscape for suitable locations for antennas and repeaters.

Those who were inclined to do so built antennas, an activity that proved to be quite attractive to a diverse range of people. It is also something that turns the rather abstract idea of the network into something that can be literally grasped. Antenna building also involves learning about basic physics and the electromagnetic spectrum, which is very useful in a world pervaded by electronic devices.

Other workshop participants turned to the software side of things. At the time, old computers were used as wireless routers. They were taken apart, reassembled, equipped with network cards, turned into Linux machines and then configured using some bespoke experimental routing software. The issues that arose with regard to routing and networking were publicly and hotly debated which, in my case, triggered a steep learning curve. This was a time when I started to gain knowledge of IP numbers, address spaces, NATing and port forwarding, and, last but not least, routing protocols.

Screenshot of Consume Node Data Base of UK in text mode

As Stevens and a core group of supporters traveled up and down the country, workshopping, talking, advocating, Consume quickly developed a national dimension. Networks and nodes popped up all over the country. The vibrancy of Consume was based on the support it found among a wide range of people across the UK. Stevens advocated a model of de-centralised person-to-person communication, realized via self-managed nodes. De-centralisation was at the core of the idea, politically as well as technologically. The network was not centrally owned and managed but came together as a result of the activities of many independent and self-motivated actors. James Stevens at the time argued:

“Creating any sort of infrastructural layer on the landscape, in an environment or the community, that's something that has always been left to the councils or commercial entities, but this is something that can be pulled out from the ground at any level almost really. A school can just decide to put up an access point: utilize, redistribute, in order to legitimately pass the network that it has got from its council network and say its available throughout the school without any wires.” (James Stevens 2003)

Stevens wanted to demonstrate that large, infrastructural projects could be realised in a bottom-up manner, through processes of self-organisation and through the mobilization of social capital (rather than financial capital). This was only possible because Consume attracted some very gifted people, such as the Russian artist-engineer Alexei Blinov, founder of Raylab, later Hivenetworks; hacker-programmer-techies such as Jasper, who programmed the Consume node database, and BSD core developer Bruce Simpson; and network admin wizards such as Ten Yen and Ian Morrison. Other people who participated, such as Saul Albert and Simon Worthington, co-founder of Mute Magazine, could be described as non-commercial social entrepreneurs; their strength was also advocacy, creating ideas of their own and pulling in people and resources; the same can be said of artists and curators such as Manu Luksch, Ilze Black and myself who, for a while, also belonged to the core of the London free network scene (I will dedicate a special chapter to art and wireless community networks later in this book). Another core participant was Adam Burns, who can claim to have had the same idea, more or less, by himself, and had set up the first wireless free network node in Europe, free2air.org.

You are Free 2 Air Your Opinion

Adam Burns and Manu Luksch explore skies over East London. Photo: David

While Consume had been an early project, as a really existing free network in London it had been preceded by Free2air.org. Free2air.org was the virtual flag flown by Adam Burns, of Australian descent. In his daytime job he managed firewalls of financial groups; in his spare time he had set up an omni-directional antenna on a building on Hackney Road, just above the bus stop and the Halal Chicken shop. From there, anybody in range could pick up a signal.

"To my knowledge I am not aware of any other facility in Europe offering totally open network access like this. I do not want to know the name, the address, the credit card number, the colour of the eyes or hair of anyone who connects through to this network, that's unimportant to me. And I don't feel that this is a necessary requirement." (Adam Burns, Interview with Armin Medosch)

At the time of the interview, which must have happened in autumn 2002, Adam Burns claimed that free2air had been active for 18 months. Thus, from late 1999 or early 2000, Free2air.org, hosted by a machine called Ground Zero, offered free wireless Internet access to everyone passing through. Adam Burns had been involved with small ISPs in Australia in the early 1990s, when providing Internet access was done more out of ethical conviction than business sense. This background inspired his keen sense of networking as a social project.

“Free2air is a contentious name, but one that I have chosen to use. Basically it has a dual meaning: once you establish such a network the cost of information travel is free. It's not a totally free service to establish, you need to buy hardware, you need computer expertise and so on. But the whole idea of ongoing costs are minimal. Secondly, what I liked about it is the plans for a distributed open public access network. It gets rid of the idea of a central ISP, in other words, globally around the world, when we are talking about the Internet or censorship or pedophiles hanging out, or bomb makers, there is a lot of concentration on what really goes on in networks. When you have got a lot of people passing information directly to each other its very hard to track down what information has and has not passed and how it got air. So there is a double meaning to free2air, it also means you are free to air your expressions without concern or problems in getting that message through.” (Adam Burns, 2002)

East End Net

An omni-directional antenna by Consume, a spire and the towers of the financial center, East London 2002

Adam Burns became a central person in the London wireless scene around Consume and what came to be known as East End Net. The idea was launched to connect Limehouse Town Hall with the area around the office of Mute magazine at lower Brick Lane, and somehow to connect also Bethnal Green and central Hackney. That bit was also the place where I lived at the time. While the large version of East End Net never materialised, we had our local version of it, with a connection from free2air.org to the “compound”, a large workspace building for small industries at the bottom of Broadway Market in E8.

With AmbientTV.net's help, the connection was spread through the building by wireless and by wire. For several years a community of changing size, somewhere between 20 and 60 people, inhabited a chunk of the net. Due to the social composition of this area, a number of art projects using the free WiFi took place. I will turn to those projects at a later stage.

East End Net: The Original Map

While East End Net was never built in the way it was supposed to be, the discussions and the focus that it generated were highly productive in a number of areas. Several lines of flight take off from this point, all of which will have to be followed separately - so I'll just hint at some of those ideas in overview form. The hand-drawn original map of East End Net was the starting point for a lot of ideas about mapping of wireless networks, but also ideas about communal map making as such. It was the time when the OpenStreetMap project began, as it was recognised that even something as complex as a map could be built in a decentralized way by unpaid volunteers.

Consume's NodeDB, as already mentioned, was a quite early and successful attempt at building a website that facilitated registering a free network node through a wiki-like functionality. The idea was that the database would not only contain technical information about nodes, but also additional information about services offered. In this way, the NodeDB would become the focus of community development and of micro-ecologies of small business, art, culture, activism.

The communal building of a wireless mesh network over a large part of a metropolitan area also raised issues about ownership and responsibility. While, as we shall see, in Germany the discussion from the very start was dominated by anxiety about legal repercussions of sharing an Internet connection, in London the discussion was about the notion of the commons. It was through Julian Priest that I was introduced to the work of Elinor Ostrom, who successfully contested the hegemony of the thesis of the Tragedy of the Commons - work for which she later received the Nobel Prize in economics. We started to discuss the implications of what it meant to treat the network as a commons and sought to find ways of affirming this status of the network commons.

For me, personally, two fundamental insights emerged from my involvement at the time. Through participating in workshops and talking to techies, I started to understand a bit more of what happens behind the surface of the screen when one clicks on a web-page or sends an email. As I gained insight into how networks function technically, I experienced this as a form of empowerment. In my view, everyone should understand at least a little bit about how networks work. Why? Because networking is not just about moving around bits and bytes, it is about communication, freedom of speech, about democratic participation, the freedom to learn things. One big problem that we have in societies such as ours is that the division of labour imposed on us creates categorical separations between things that should be seen and understood as belonging together. Building and maintaining telecommunication networks is seen as a technical task but affects fundamental human rights and social issues. Thus, everybody should have at least some idea about how it works, as they otherwise cannot meaningfully participate in Network Society.

Thus, as a grand thesis I would like to introduce here, I propose that the involvement of ordinary people in building a network commons has a profound emancipatory effect. In particular, as the process allows people to learn more about the structure and the functioning of the Internet, they gain a better understanding of what they can potentially achieve in societies and, no less important, how to protect themselves from the harmful effects of information abuse by corporations and government. As people learn how networks work they can become teachers of the free network spirit. They will understand that they can become part of the network (and not only be users of a service provided by a corporation or the state), and can bring to it their own specialisations and ideas. Through that, the idea of the network also gets enriched.

Thus, the second part of the thesis is that free networks contribute to the democratisation of technology. Conventionally, technology is considered to be developed behind the closed walls of research labs. There, gods in white (or in jeans and black sweater vests) develop the technologies of the future, which the thankful people then consume as a commodity. Through the way in which wireless community networks function, the development of cutting-edge technology is opened up to wider mechanisms of participation. This second part of the thesis is almost confirmed already through the existence of a project such as the EU project Confine. Through the involvement of community networkers in shaping future technologies, those technologies become less elitist, less controlled by narrow commercial or security interests. The original peer-to-peer spirit of the net gets enhanced and made fit for the future in a network commons that is there to protect our democratic freedoms and rights.

Next, Chapter 2: Consume the Net - the internationalisation of an idea (watch this space ...)

- 1. Stone, Allucquère Rosanne. The War of Desire and Technology at the Close of the Mechanical Age. MIT Press, 1996.

- 2. Rheingold, Howard. The Virtual Community: Homesteading on the Electronic Frontier. MIT Press, 1993.

- 3. Grundmann, Heidi. Art + Telecommunication. Vancouver, [B.C.]: Western Front Publication, 1984.

- 4. I have written more extensively about this in Medosch, Armin. “Kreative Milieus.” In Vergessene Zukunft: Radikale Netzkulturen in Europa, 1., Aufl., 19–26. Bielefeld: Transcript, 2012.

- 5. James Stevens, interview with the author, June 2003, private notes

- 6. See the article by Josephine Berry, http://www.medialounge.net/lounge/workspace/crashhtml/cc/23.htm

- 7. Lemstra, Wolter, Vic Hayes, and John Groenewegen. The Innovation Journey of Wi-Fi: The Road to Global Success. Cambridge University Press, 2010.

- 8. Corinna "Elektra" Aichele, a free networker from Berlin, has summed up those problems and possible solutions much better than I ever could in her book "Mesh - Drahtlose Ad-hoc Netze", Open Source Verlag, 2007

Consume the Net: The Internationalisation of an Idea (chapter 2, part 1, draft)

This chapter starts out with a summary of the achievements of Consume.net, London and then traces the development of this idea, how it was spread, picked up, transformed by communities in Germany, Denmark and Austria. The internationalisation of the free network project also saw significant innovations and contributions, developing a richer and more sustainable version of the network commons through groups such as Freifunk.

In London, Consume had developed a model for wireless community networks. According to this idea, a wireless community network could be built by linking individual nodes which would together create a mesh network. Each node would be owned and maintained locally, in a decentralized manner, by either a person, family, group or small organisation. They would configure their nodes in such a way that they would link up with other nodes and carry data indiscriminately, regardless of where it came from and where it was going. Some of those nodes would also have an Internet connection and share it with everybody else on the wireless network. Technically, this would be achieved by using ad-hoc mesh network routing protocols, but those were not yet a very mature technology. Socially, the growth of the network would be organised through workshops, supported by tools such as mailing lists, wikis and a node database, a website where node owners could enter their node together with some additional information, which was then shown on a map. Within the space of two years, this proposition had become a remarkable success.
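For readers who like to see such a model written down, the following Python sketch illustrates the basic idea. It is an illustration under stated assumptions and not the software Consume used: real ad-hoc routing protocols discover their neighbours dynamically and work in a distributed way, whereas this toy has a complete map of the network and simply searches it. The node names, the `links` map and the function `path_to_gateway` are all invented for this example.

```python
from collections import deque
from typing import Dict, List, Optional, Set


def path_to_gateway(links: Dict[str, List[str]],
                    gateways: Set[str],
                    start: str) -> Optional[List[str]]:
    """Breadth-first search for a fewest-hop path from start to any gateway node."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in gateways:
            return path
        for neighbour in links.get(node, []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append(path + [neighbour])
    return None  # no route: this node is cut off from every gateway


# Hypothetical neighbourhood: each node lists the nodes it has a radio link to.
links = {
    "studio": ["cafe"],
    "cafe": ["studio", "town_hall"],
    "town_hall": ["cafe", "office"],
    "office": ["town_hall"],
    "island": [],                 # a node nobody can see yet
}
gateways = {"office"}             # only this node shares an Internet connection

print(path_to_gateway(links, gateways, "studio"))
print(path_to_gateway(links, gateways, "island"))
```

The studio reaches the Internet by hopping through the cafe and the town hall, nodes owned by other people who carry its traffic without asking what it is. The isolated node shows the social side of the model: it gets connected only when a neighbour within range puts up an antenna.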

Consume nodes and networks popped up all over the UK. Consume had made it into mainstream media such as the newspaper The Guardian http://www.theguardian.com/technology/2002/jun/20/news.onlinesupplement1 . The project also successfully tied into the discourse on furthering access to broadband in Britain. The New Labour government of Tony Blair was, rhetorically at least, promising to roll out broadband to all as quickly as possible. This was encountering problems, especially in the countryside. The incumbent, British Telecom, claimed that in smaller villages it needed evidence that there was enough demand before it made the local telecom exchange ADSL ready. ADSL is a technology that allows using standard copper telephone wire to achieve higher transmission rates. The Access to Broadband Campaign (ABC) occasionally joined forces with Consume. The government could not dismiss these campaigners as anarchist hackers from the big city. They were “good” business people from rural areas who needed the Internet to run their businesses, and BT was not helping them. Consume initiator James Stevens and supporters traveled up and down the country, doing workshops, advocating, talking to the media and local initiatives.

BerLon

In 2002 the opportunity arose to bring Consume to Berlin. Although living in London, I had been working as co-editor in chief for the online magazine Telepolis for many years, so I knew the German scene quite well. After quitting Telepolis in spring 2002, I traveled to Berlin to renew my contacts. The curator of the conference Urban Drift, Francesca Ferguson, asked me to organize a panel on DIY wireless and the city. This gave me the opportunity to bring James Stevens and Simon Worthington to Berlin, as well as nomadic net artist Shu Lea Cheang.

The idea emerged to combine our appearance at Urban Drift with a workshop that would bring together wireless free network enthusiasts from London and Berlin. Taking inspiration from Robert Adrian X's early art and telecommunication projects, we called this workshop BerLon, uniting the names Berlin and London. Robert Adrian X had connected Wien (Vienna, Austria) and Vancouver, Canada through four projects between 1979 and 1983, calling the project WienCouver http://kunstradio.at/HISTORY/TCOM/WC/wc-index.html.

Our organisational partner in Berlin was Bootlab, a shared workspace in Berlin Mitte, where a lot of people interested in unconventional ideas using new technologies had a desk. Some Bootlabers were running small commercial businesses but most of them constituted the critical backbone of Berlin's network culture scene. Bootlab was a greenhouse for new ideas, a little bit like Backspace had been in the late 1990s in London. Our hosts at Bootlab were Diana McCarthy, who did the bulk of organisational work, and Pit Schultz, who had, together with Dutch network philosopher Geert Lovink, invented the notion of net-critique and initiated the influential mailing list nettime.

A little bit of additional money for travel support from the Heinrich Böll Foundation, the research and culture foundation of the German Green Party, enabled us to fly over some more networkers from London, such as electronics wizard Alexei Blinov and free2air.org pioneer Adam Burns. And as is often the case with such projects, it developed a dynamic of its own. Julian Priest came from Denmark, where he lived at the time, and brought along Thomas Krag and Sebastian Büttrich from Wire.less.dk. Last but not least, there were people from Berlin who had already experimented with wireless networking technology, among them Jürgen Neumann, Corinna “Elektra” Aichele and Sven Wagner, aka cven (c-base Sven).

The rest is history, so to speak. I would be hard pressed to recall in detail what happened. Luckily, the Austrian radio journalist Thomas Thaler was there. His report for Matrix, the network culture magazine of Austrian public radio ORF Ö1 gives the impression that it was a bit chaotic, really. There was no agenda, no time-table, no speakers list. Sometimes somebody grabbed the microphone and said a few words. As Thaler wrote, “London was clearly in the leading role” in what will have to be accounted for under “informal exchange.” Most things happened in working groups.

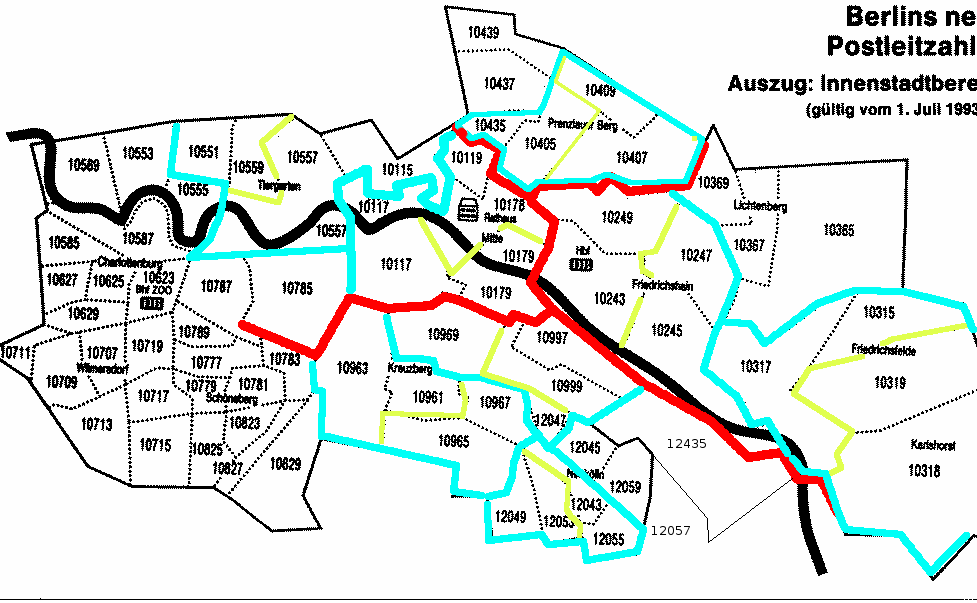

One group was discussing the networking situation in Berlin. There had already been initiatives to create community networks in Berlin, one called Prenzelnet, another one Wlanfhain (Wlan Friedrichshain). As an after-effect of German reunification, there were areas in the eastern part of Berlin that had OPLAN, a fiber-optic network, which made it impossible to use ADSL. What also needs to be accounted for is the special housing structure of Berlin.

As an after-effect of Berlin having first been an enclave of Western “freedom” and then having a wild East of occupied houses and culture centers right in its center, in Berlin Mitte and neighboring areas, a relatively large number of people live in collective housing projects. These are not small individual houses but large apartment blocks, collectively owned. Freifunk initiator Jürgen Neumann lives in such a housing project, which was affected by the OPLAN problem, so that 35 people shared an expensive ISDN connection. After learning about WLAN, he built a wireless bridge to an ISP for his housing association and spread it around the block. Other people who were already experimenting with wireless networks before BerLon were c-base and Elektra.

Another working group dealt with the question of how to define the wireless networking equivalent to the licensing model of Free Software, the General Public License (GPL). From Berlin, Florian Cramer, an expert on Free Software topics, joined this discussion. The issue of a licensing model for Free Networks caused us quite a headache at BerLon, and we did not really find a solution there, but we managed to home in on the subject enough to finish the Pico Peering Agreement at the next meeting in Copenhagen.

At BerLon, Krag and Büttrich also reported on their engagements in Africa. There, well-meaning initiatives trying to work with Free and Open Source technology often meet socially difficult and geographically rugged environments.

I can't claim to know in detail what happened in the other working group, the one on networking in Berlin, but the result is there for everyone to see. This was the moment of the inception of Freifunk, the German version of wireless community networking. Freifunk (which, in a word-for-word translation, means simply “free radio transmission”) is today one of the most active wireless community networking initiatives in the world. Ironically, while today Consume is defunct, Freifunk became a fantastic success story. With German “Vorsprung durch Technik” Freifunk volunteers managed to contribute significantly to the praxis of wireless community networks. In particular, the Freifunk Distribution and the adoption and improvement of mesh networking technology advanced inter-networking technology. Freifunk's existence, vibrant and fast growing in the year 2014, is testimony also to the social viability of the Consume idea.

However, I am not claiming that Freifunk simply carried out what Consume had conceived. This would be a much too passive transmission model. Freifunk, just like Guifi, contributed significant innovations of its own. I am also not claiming that Freifunk jumped out of the BerLon meeting like the genie out of the bottle. A number of significant steps were necessary. However, it is also undeniably the case that BerLon provided the contact zone between Berlin and London. This set into motion a process which would eventually lead to a large and successful community network movement.

Jürgen Neumann and a few other people from Berlin decided to hold a weekly meeting, WaveLöten (wave soldering), every Wednesday at c-base, starting on 23 October 2002, very soon after BerLon. WaveLöten was an important spark for Freifunk in Berlin. As Neumann said, the lucky situation was that there was a group of people who understood the technical and social complexity of the undertaking and each started to contribute to the shared project of the network commons – Bruno Randolf, Elektra, cven (c-base Sven), Sven Ola Tücke and others on the technical side, Monic Meisel, Jürgen, Ingo, and Iris on the organisational and communicative side.

Why could Freifunk thrive in Berlin and Germany while Consume lost its dynamic in the UK? The answer is not simple, so I am just pointing at this question here; it will pop up throughout this book. What makes a wireless community network sustainable? Why do some communities thrive and grow while others fall asleep?

Copenhagen Interpolation and the Pico Peering Agreement

BerLon was followed, on March 1st and 2nd 2003, by the Copenhagen Interpolation. On this occasion the Pico Peering Agreement was brought to a satisfactory level. I am happy to have contributed to writing it, and in the years since it has found some implementation. The Denmark meeting was also quite small. Present were people from Locustworld and the Wire.Danes, Malcolm Matson, and Jürgen Neumann, Ingo Rau and Iris Rabener from Berlin. In Copenhagen it was decided to hold the first Freifunk Summer Convention in Berlin in September 2003.

At BerLon we had discussed the social dimensions of free networking. What were the “social protocols” of free networking? The answer was to be given by the Pico Peering Agreement, a kind of rights bill for wireless community networking.

It had all begun with discussions about how to improve the NodeDB. James Stevens expressed his desire that a node owner could also choose a freely configurable license – to create a bespoke legal agreement on the fly for his network on the basis of a kind of licensing kit. The node owner should be able to choose from a set of templates to make known to the public what their node offered and under which conditions. This work should be done with the help of lawyers so that node owners could protect themselves. This seemed a good idea but was way too complicated for what our group was able to fathom at the time. We needed something much simpler, something that expressed the Free Network idea in a nutshell.

The success of Free Software is often attributed to the “legal hack,” the GPL. This is a software license which explicitly allows users to run, copy, use and modify software, as long as the modified version is again put under the GPL. This “viral model” is understood to have underpinned the success of Free Software. Today, I am no longer so sure that this is really the main reason why Free Software succeeded.

Maybe there were many other reasons, such as that there was a need for it, that people supported it with voluntary labour, or that the development model behind Free Software, the co-operative method, simply resulted in better software than the closed model of proprietary software with its top-down hierarchical command system. Anyway, we thought that Free Networks needed an equivalent to the GPL in order to grow. But how to define such an equivalent?

With software, there is one definite advantage: once the first copy exists, the cost of making additional copies and disseminating them through the net is very low. Free Networks are an entirely different affair: they need hardware which costs money, and this hardware is used not just indoors but also outdoors, where it is exposed to weather and other environmental influences. Free Networks cannot really be free as in gratis. They need constant maintenance and they incur not inconsiderable costs.

The crib that got us there was the sailing boat analogy. If there are too many sailing boats at a marina, so that not all of them can berth at the pier, boats are berthed next to each other. If you want to get to a boat that is further away from the pier, you necessarily have to step over other boats. Custom allows you to walk across other boats in front of the mast. You don't pass at the back, where the more private areas of the boat are – with the entrance to the cabins and the steering wheel – but in front of the mast. In networking terms that would be the public, non-guarded area of a local network, also known as the demilitarized zone (DMZ).

We agreed that it was a condition of participation in a free network that every node owner should agree to pass on data destined for other nodes without filtering or discriminating. We can claim that we defined what today is called network neutrality as the centerpiece of the Copenhagen Interpolation of the Pico Peering Agreement: http://www.picopeer.net/PPA-en.html.

While the agreement is important, and I am happy to have contributed to it, I see things slightly differently today. I think the real key to Free Networks is the understanding of the network as commons. The freedom in a network cannot be guaranteed by any license but only by the shared understanding of the network commons. The license, however, is an important additional device.

Fly Freifunk Fly! (Chapter 2, part 2, draft)

The Copenhagen Interpolation had instilled confidence in its very small number of participants, including a delegation of three from Berlin. In Berlin, the domain Freifunk.net was registered in January 2003. The name was coined by Monic Meisel and Ingo Rau over a glass of red wine. Their initial impulse, according to Monic Meisel, was to create a website to spread the idea and make the diverse communities that already existed visible to each other. They wanted a domain name that would be easily understood, a catchy phrase that conveyed the idea.

Early Map of Berlin Backbone, courtesy Freifunk

Freifunk is a good name. It carries the idea of freedom, and the German word “funk” has more emotional pull than “radio.” Funk is funky. The German word “Funke” means spark. The reason is that early radios actually created sparks to make electromagnetic waves. “Funken” thus means both to create sparks and to make a wireless transmission. Meisel, who at the time worked for a German web agency, also created the famous Freifunk logo and the visual identity of the website.

Freifunk Logo by Monic Meisel, image courtesy Freifunk

It seems that Freifunk took off because of a combination of reasons. It quickly found support among activists all over Germany, not just Berlin. It had people who had a good understanding of technology and made the right decisions. And Freifunk did very good PR from the start. Jürgen Neumann quickly emerged as a spokesperson for the fledgling movement. However, he could always rely on other people around him to communicate the idea through a range of different means. From the start, Freifunk was more like a network of people than Consume ever was. When James Stevens decided to stop promoting Consume, it ceased to exist as a nationwide UK network of networks.

In spring and summer 2003 the Freifunk germ was sprouting in Berlin. I was writing my German book and started to put draft chapters into the Freifunk Wiki. Freifunk initially grew quickly in Berlin, in particular in areas that had the OPAL problem and thus could not get broadband via ADSL. As the Wayback Machine shows

In June 2003 the Open Cultures conference, curated by Felix Stalder in Vienna, brought together a number of wireless community network enthusiasts. There, Eben Moglen, the lawyer who had helped write the GPL, gave a rousing speech. His notes consisted of a small piece of paper on which he had written:

free software – free networks – free hardware.

Eben Moglen at OpenCultures conference 2003; Image courtesy t0 / WorldInformation.org

This was the holy trinity of freedom of speech and participatory democracy in the early 21st century. His speech was based on the Dotcommunist Manifesto, which he had published earlier that year. Moglen skillfully paraphrased the Communist Manifesto by Marx and Engels, writing “A Spectre is haunting multinational capitalism--the spectre of free information. All the powers of ‘globalism’ have entered into an unholy alliance to exorcize this spectre: Microsoft and Disney, the World Trade Organization, the United States Congress and the European Commission.” Moglen argued that advocates of freedom in the new digital society were inevitably denounced as anarchists and communists, while actually they should be considered role models for a new social model, based on ubiquitous networks and cheap computing power. His political manifesto pitted the digital creative workers against those who merely accumulate and hoard the products of their creative labour.

While sharply polemical and as such maybe sometimes a bit black and white in its argumentation, Moglen's Dotcommunist Manifesto is correct insofar as it outlines a social conflict which characterizes our time and is still unresolved. The new collaborative culture of the Net would in principle enable a utopian social project, where people can come together to communicate and create cultural artifacts and new knowledge freely. This world of producers he juxtaposes with another world which is still steeped in the thinking of the past, which clings to the notion of the production of commodities and which seeks to turn into commodities things that simply aren't. This is the world of governments, of corporations and lobbyists who make laws in their own interest which curtail the freedom and creative potential of the net.

There is no reason why a network should be treated as a commodity. The notion of access to the Internet is, as the free network community argues, a false one. The Internet is not a thing to which one gets sold access by a corporation. As a network of networks, everybody who connects to it can become part of it. Every receiver of information can also become a producer and sender of information. This is realized on the technical infrastructural layer of the net, but it has not yet filtered through to mainstream society.

Freifunk Summer Convention 2003

In September 2003 the first Freifunk Summer Convention, FC03, took place in Berlin at c-base. This memorable, self-organised event, from September 12 to 14, brought together a range of people and skills which gave some key impulses to the movement to build the network commons. Among the people who had joined of their own volition were activists from Djursland. This is a district in the north east of Denmark, a rural area with economic and demographic problems. Djurslands.net demonstrated for the first time that you could have a durable large-scale outdoor net with a large number of nodes. The guys from Djurslands.net brought a fresh craftsman approach to free networking, with solidly welded cantennas (antennas made from empty food cans). At the Freifunk convention it was decided to have the next community network meeting in Djursland in 2004, which turned out to become a major international meeting of community networkers in Europe.

Map of Djurslands.net

Reports conflict here. According to some, Bruno Randolf showed the Meshcube, a technology he developed for a company in Hamburg, at FC03. However, according to a recent entry on the timeline, it was only after FC03 that the development of the Meshcube began in earnest. At the time, Julian Priest wrote in the Informal Wiki:

"Bruno Randolf ran mesh routing workshop. After a good discussion covering the main mesh protocols and solutions, aodv, mobile mesh, scrouter, and meshap, around 10 - 15 linux laptops were pressed into service as mesh nodes using the mobile mesh toolset. Tomas Krag crammed a couple of wireless cards into his laptop, (which kind of fitted), and ran the border router and others stretched the network around the buildings. Many discussions about how to assign ip addresses in the mesh followed, maybe ipv6 mobile ip and zero conf can be ways forward here. Bruno demoed the jaw dropping 4G mesh cube.. 4 cm cube sporting up to 4 radios, smc type antenna connectors, a 400 Mhz mips 32Mb flash 64M ram, with power over ethernet and usb, currently running debian. A space to watch for sure."

The Meshcube made use of small industrial chips optimized for running an embedded Linux distribution. It worked with the AODV routing protocol (CHECK). Another early protocol was OLSR, developed by Andreas Tønnesen as a master thesis project at the university graduate center in Oslo. However, it seems that at FC03 it was Mobile Mesh which was discussed and tested. See this entry on meshing, probably by Elektra, on the early Freifunk Wiki.

Thus it is confirmed that on a mild day in September 2003 in Berlin, a couple of dozen geeks could be seen walking around the streets with laptops, making remarks about pings and packets that were incomprehensible to ordinary passers-by. This was the beginning of a long and fruitful engagement of free network communities with mesh routing protocols. (See also this report from 2003.)

Shortly after FC03, the Förderverein Freie Netzwerke was founded, a not-for-profit organisation whose aim was the furthering of wireless community networks. The convention had also mobilized a television crew, who made this short film (in German): Real Video stream, Polylux TV, http://brandenburg.rbb-online.de/_/polylux/aktuell/themen_jsp.html

It shows a number of free network advocates including this author at a slightly more youthful age.

As the video makes evident, Freifunk from the start advertised itself as a social project which is about communication and community. Freifunk created an efficient set of tools to be picked up as a kind of community franchise model, as Jürgen Neumann calls it. There is the Freifunk website with a strong visual identity, and the domain name, which also works as an ESSID of the actual networks. Anybody can pick up a Freifunk sub-domain and start a project in a different locality. Freifunk initially grew out of Berlin's creative new media scene, so that from the very start interesting videos and other new media content were produced.